The Content-Creator Angle: Using an AI Music Generator as a “Soundtrack Workflow,” Not a Toy

Published 4 hours ago

By Admin

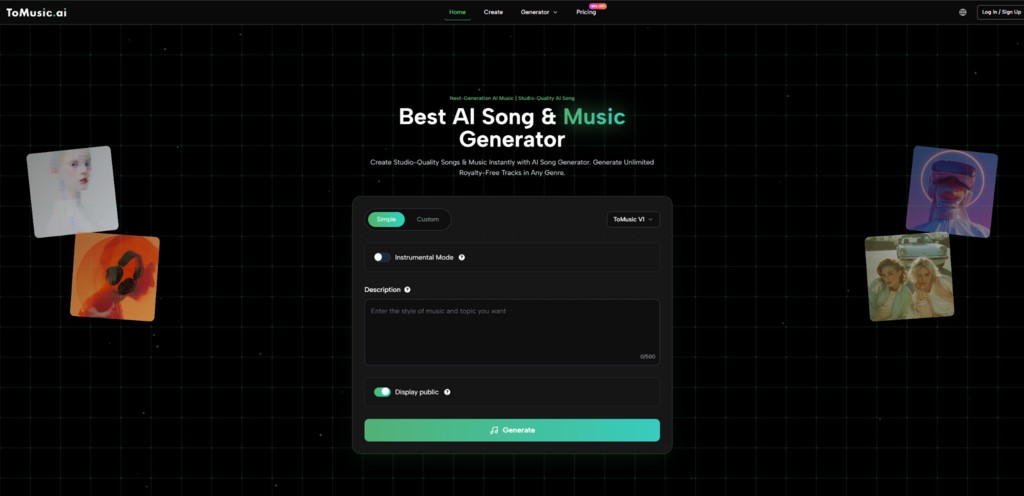

If you make videos, podcasts, short-form clips, ads, or even product demos, you’ve felt this pain: you need music that matches the moment, but licensing takes time, stock tracks feel overused, and custom composition doesn’t fit the schedule. I started looking at AI music generation less like “making songs” and more like building a reliable soundtrack pipeline.

That’s the context where an AI Music Generator felt most practical to me, especially when treated as a repeatable workflow rather than a one-off trick.

The Real Problem: Your Story Moves Fast, Audio Falls Behind

- Problem: Your content is ready, but the music doesn’t match the pacing, tone, or brand vibe.

- Agitation: A slightly wrong soundtrack can make good visuals feel cheap, or emotionally “off.”

- Solution: Generate multiple tailored drafts quickly, pick the best, and keep your production moving.

In my own use, the biggest shift was that I could generate several options for the same scene—“calm tech review intro,” “high-energy reveal,” “gentle outro”—and choose the one that actually fit the edit.

A Better Mental Model: Treat It Like a Music Department

Most creators don’t need “a masterpiece.” They need:

- consistent mood,

- clean structure,

- and the ability to iterate.

In that sense, Lyrics to Song AI works like a lightweight music team: it produces candidates, you curate, and you refine.

What You’re Actually Controlling

Even when the interface feels simple, you’re influencing real production decisions:

- Genre: the overall musical language (pop, cinematic, lo-fi, EDM, etc.)

- Mood: emotional color (uplifting, tense, dreamy, dark)

- Tempo: energy and pacing

- Instrumentation: texture (piano, strings, synths, guitars, drums)

- Voice choice (optional): whether vocals exist and what they feel like

- Lyrics (optional): narrative specificity and hook direction

What I found: the more you describe the role of the track in the content (intro bed, montage driver, emotional lift, outro), the more “usable” the results tend to feel.
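One way to keep these controls explicit is to write them down as a small structured spec before turning them into prompt text. The sketch below is only an illustration: the `TrackSpec` class, its field names, and the semicolon-joined prompt format are assumptions made for this example, not the input format of any particular generator.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrackSpec:
    """Hypothetical container for the controls listed above."""
    role: str                      # what the track does in the edit
    genre: str
    mood: str
    tempo: str                     # free-form description, e.g. "slow" or "mid"
    instrumentation: list[str]
    voice: Optional[str] = None    # None means instrumental
    lyrics: Optional[str] = None   # stored, but left out of the prompt in this sketch

    def to_prompt(self) -> str:
        """Join the fields into one descriptive prompt string, role first."""
        parts = [
            self.role,
            f"{self.genre} genre",
            f"{self.mood} mood",
            f"{self.tempo} tempo",
            "featuring " + ", ".join(self.instrumentation),
            f"{self.voice} vocals" if self.voice else "instrumental, no vocals",
        ]
        return "; ".join(parts)


spec = TrackSpec(
    role="intro bed for a calm tech review",
    genre="lo-fi",
    mood="uplifting",
    tempo="mid",
    instrumentation=["piano", "synths"],
)
print(spec.to_prompt())
```

Putting the role first mirrors the observation above: the role of the track in the content leads the description, and the musical details follow.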

The Before/After Bridge: Stock Tracks vs. Custom Drafting

Stock libraries are convenient, but you’re selecting from what exists. AI drafting flips the flow: you generate what you need, then select from your own variations.

That matters because content is specific. The best soundtrack isn’t “good music.” It’s music that matches your pacing, your story beat, and your emotional transition.

A Comparison Table (Focused on Creator Needs)

Here’s how the workflow difference shows up in day-to-day creation:

| Creator Need | Common Approach | AI Drafting Approach |
| --- | --- | --- |
| Quick intro theme | Search stock catalogs | Generate multiple 10–20 sec “intro-ready” drafts and pick one |
| Matching scene pacing | Time-stretch/edit existing tracks | Regenerate with clearer tempo + intensity guidance |
| Avoiding “heard it before” music | Dig deeper into catalogs | Produce fresh variations from your own prompt |
| Brand consistency across episodes | Save a few stock tracks | Reuse a prompt template + tags, then iterate for each episode |
| Tight deadline | “Good enough” track | Fast candidates, then choose the best fit |

Used this way, the tool becomes less about “AI wow” and more about removing bottlenecks.

A Prompt Framework That Works for Creators

When I needed predictable results, I used a three-layer prompt structure:

1. Role

What is the track doing in the edit?

“background bed for voiceover,” “reveal moment,” “calm outro,” “product demo loop”

2. Sound palette

Name a few anchor instruments.

“warm piano + soft pads,” “clean guitar + light drums,” “punchy synth bass + crisp hats”

3. Motion

Describe how it evolves.

“starts minimal, builds slowly, opens into a bigger chorus-like section, then resolves”

This makes the result feel structured enough for content use.
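The three layers can be sketched as a small helper that refuses to build a prompt when a layer is missing. This is a minimal illustration under stated assumptions: `build_prompt` and the "Role / Sound palette / Motion" labels are hypothetical, and a real generator may want the text phrased differently.

```python
def build_prompt(role: str, palette: list[str], motion: str) -> str:
    """Combine the three layers (role, sound palette, motion) into one prompt."""
    # All three layers are required; an empty one defeats the structure.
    if not role.strip() or not motion.strip() or not palette:
        raise ValueError("role, palette, and motion are all required")
    return (
        f"Role: {role.strip()}. "
        f"Sound palette: {' + '.join(palette)}. "
        f"Motion: {motion.strip()}."
    )


prompt = build_prompt(
    role="background bed for voiceover",
    palette=["warm piano", "soft pads"],
    motion="starts minimal, builds slowly, then resolves",
)
print(prompt)
```

Keeping the layers as separate arguments makes it easy to change one of them (for example, only the motion) between re-rolls while the rest stays fixed.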

Personal Observation: Where It Saved Me the Most Time

The time savings weren’t from “one perfect generation.” They came from fast variation.

Instead of spending 30 minutes searching for a track that “kind of fits,” I could generate a few options tailored to the scene and choose the one that locked in with the edit. It felt like editing music by selection, not by struggle.

Limitations You Should Expect (So It Feels Realistic)

A useful tool still has friction:

- Some generations need re-rolls: the first pass can be close but not quite right.

- Prompt sensitivity: small wording changes can swing results in unexpected directions.

- Vocal stability varies: if you’re using vocals, some styles feel more consistent than others.

- Mix balance can differ: you may want to regenerate if an instrument sits too loud.

If you’re trying to hit the vibe of a very specific reference track, you may need more iterations than you expect. Planning for that makes the process smoother.

How to Make It Feel “On Brand”

Consistency comes from templates. Instead of starting from scratch, create 2–3 reusable prompt blueprints:

- Brand Intro Template: consistent tempo + signature instrument

- Background Template: unobtrusive rhythm + warm texture

- Highlight Template: higher energy + brighter instrumentation

Then tweak only the scene-specific details. That’s how you move from “random AI tracks” to “a recognizable audio identity.”
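The blueprint idea can be sketched with Python's standard `string.Template`: the brand values stay fixed and only the scene detail changes per episode. The template wording, the `BRAND` values, and the `render` helper are hypothetical choices for illustration, not a format any tool requires.

```python
from string import Template

# Hypothetical brand blueprints; the wording is illustrative only.
TEMPLATES = {
    "intro": Template("brand intro, $tempo, signature $instrument, $scene"),
    "background": Template("background bed, unobtrusive rhythm, warm texture, $scene"),
    "highlight": Template("highlight moment, high energy, bright instrumentation, $scene"),
}

# Fixed brand choices reused across every episode (assumed values).
BRAND = {"tempo": "steady mid tempo", "instrument": "warm piano"}


def render(kind: str, scene: str) -> str:
    """Fill a blueprint with the fixed brand values plus one scene detail."""
    return TEMPLATES[kind].substitute(BRAND, scene=scene)


print(render("intro", "calm tech review opening"))
print(render("highlight", "product reveal"))
```

Because only `scene` varies between calls, every prompt for a given blueprint shares the same brand wording, which is the point of working from templates.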

Closing Thought: It’s a Workflow Multiplier

For creators, the most valuable thing isn’t the novelty of AI music. It’s the ability to keep your storytelling momentum.

When music stops being a delay—when it becomes something you can generate, audition, and refine quickly—you spend more time making decisions that matter: pacing, emotion, narrative. And your content sounds like it belongs to itself, not to a stock library.
